On December 7, 2023, Google released the following video on using Rust for Cloud Run applications. This is an excellent getting started video. I have been working directly with the Google Cloud Run team since the beta release. I thought… Continue Reading →

This article contains my notes on Google Cloud Bigtable and is part of my series on Professional Cloud Architect certification. I plan to take the Professional Cloud Database Engineer certification, so I am starting my training for the PCA with… Continue Reading →

During Covid, I let my Google Cloud certification lapse. Recently, Google Cloud offered me a free exam voucher and $500.00 in Google Cloud credits provided I pass. I accepted their challenge. I have been working in Google Cloud for more… Continue Reading →

This article will be the home page for a collection of articles related to developing C++ applications in Google Cloud. This will include small example applications published to GitHub. I have decades of experience writing commercial enterprise applications in C++… Continue Reading →

This article is my attempt to keep track of the various environment variables that affect tools, SDKs, and applications written for Google Cloud. `GOOGLE_CLOUD_PROJECT`: environment variable defining the default project. If not set and the environment variable `GOOGLE_APPLICATION_CREDENTIALS` is set to… Continue Reading →

Introduction This blog is hosted on WordPress. I frequently configure WordPress sites on cloud servers. This article documents various configuration changes that I make to solve common problems. This article applies to Ubuntu 20.04/22.04 and WordPress 5.8 and newer. These… Continue Reading →

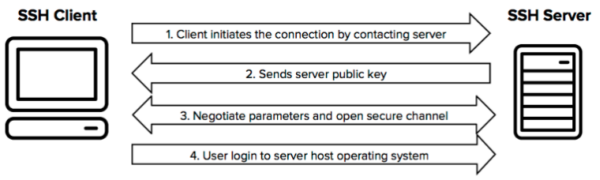

You are connecting to an OpenSSH server using an RSA private key and the following error is displayed:

    USERNAME@HOST: Permission denied (publickey,password).

You check the OpenSSH server logs and find the following entry:

    userauth_pubkey: signature algorithm ssh-rsa not in PubkeyAcceptedAlgorithms [preauth]

You are not able to authenticate with the SSH… Continue Reading →

Bunny.net is an excellent cloud storage and CDN service. I use it for my websites. Bunny offers two primary services: Bunny Storage and Bunny Pull Zones. Combined, those two comprise Bunny CDN. There are additional services: DNS, Stream (video), and… Continue Reading →

This article discusses DNS configuration problems that prevent Google-managed SSL certificates from being issued for Global HTTP(S) Load Balancers. How do you detect that there is a problem with a Google-managed SSL certificate? The certificate status is Provisioning. The Domain… Continue Reading →

This article is about PyScript and the MongoDB Data API. I have not used the MongoDB Data API before. I know MongoDB and PyScript fairly well, so I thought I would document my journey to see if they will work… Continue Reading →

This article is about MongoDB trivia. If you work with the MongoDB database, you might be interested in some of these tidbits. Credit goes to MongoDB for this idea. They held a talk at their last conference on company trivia… Continue Reading →

This article is about deploying a PyScript application on Deno Deploy. If you are familiar with Deno and Deno Deploy then you already know that Deno is a JavaScript runtime and Deno Deploy is its deployment service. Why deploy PyScript on a JavaScript platform?… Continue Reading →

Some of the PyScript WASM files are large. For example, pyodide.asm.wasm is ~9.5 MB. Correctly setting up your web server to serve these files takes some consideration. You should configure several items in your web server. This article covers Apache… Continue Reading →

PyScript is possible because of WASM. I wanted to see what is involved in calling functions located in a WASM module from Python. It turns out this is very easy to do, at least at the function import and export level… Continue Reading →

Knowing that an error occurred is the first step to preventing and solving errors. There are many types of errors that PyScript applications will experience. Network failures, resources not being available and programming mistakes are just a few problems to… Continue Reading →

PyScript PWA Creating installable PyScript applications that cache assets and run offline offers enormous potential for Python. In this article, I will show you how to create a Python application that installs on the desktop and on mobile devices. This… Continue Reading →

In PyScript, as well as JavaScript, there are only a few methods of getting data into your program: read data from the local file system, read data from the network, input data from the user, create data inside your application… Continue Reading →

A recent article by Luciano Abriata criticized PyScript. He made two statements: (1) PyScript is way too slow and heavy to load; (2) it does not support all of Python’s features and libraries. He then provided links to two example programs. The… Continue Reading →

In this article, I will show how to use the File System Access API. This API is a web platform API that enables developers to build powerful web apps that interact with files on the user’s local device. There are… Continue Reading →

This article discusses downloading and building PyScript from the source for Ubuntu 20.04 running within Windows WSL. For Linux, skip over the first section covering WSL setup. This article is written for the Python developer with limited experience building JavaScript/Node.js… Continue Reading →

As momentum builds for PyScript, a few good resources are being created. This article is my attempt to keep track of the ones that are very good and/or have detailed knowledge. Please let me know if you know of one… Continue Reading →

This will be the first of several articles that provide details on PyScript files and file systems. I will discuss the different virtual file systems and how to access files located on the desktop. I have put each example in… Continue Reading →

Introduction If you are familiar with using the Canvas with JavaScript, then you will quickly learn how to draw on the Canvas with Python once you know a couple of minor items. If you are a Python developer new to drawing inside… Continue Reading →

Introduction There are times when you want to write pure HTML and JavaScript code and, at runtime, download and execute Python code based upon various criteria. This article shows how to create the <py-script> tag, load code into the py-script… Continue Reading →